Is AI Slowing Us Down?

What a new study reveals about coding with LLMs—and why distractions might be costing us more than we think.

How much faster would you say you are when coding with AI? Maybe 50%? Or 30%?

Certainly no less than 20%, right? I mean, have you seen how fast Claude Code generates JavaScript??

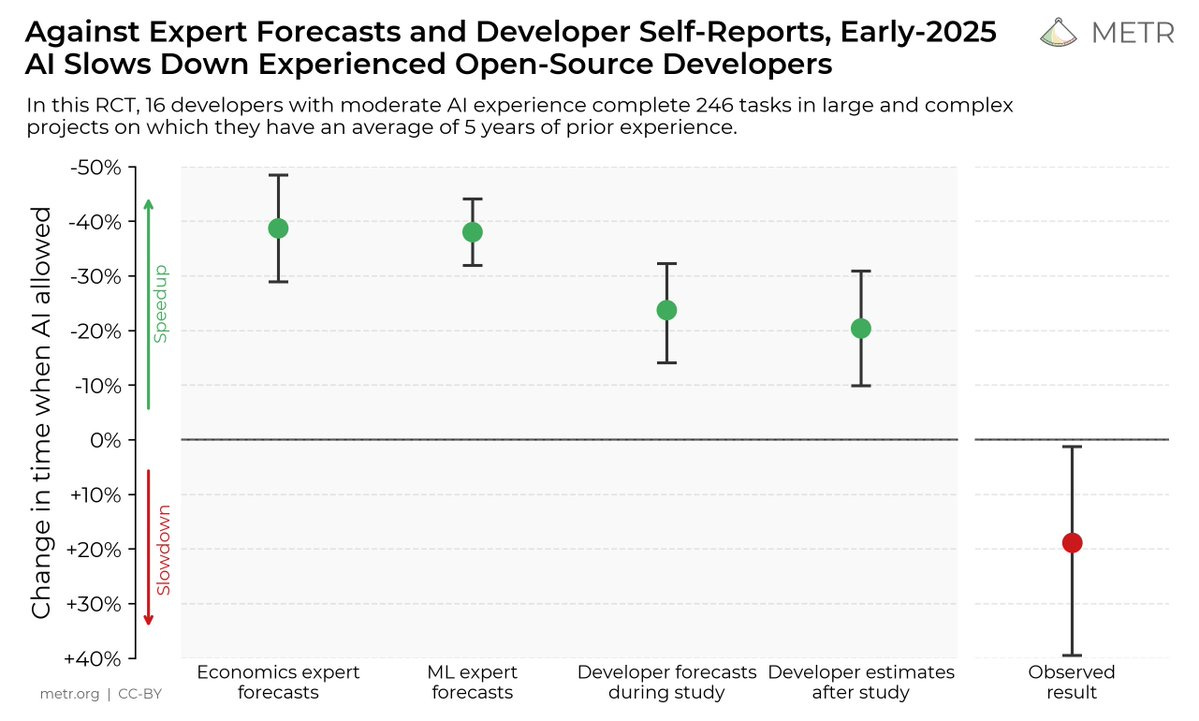

A group of researchers conducted a study over the last few months to get to the bottom of that question: They followed 16 developers working on 246 real-world tasks and measured how fast they completed them with and without AI.

Last week, they published their results in a research paper with a surprising observation:

AI didn’t make developers more productive at all. In fact, when using AI, developers took 19% longer to complete their tasks.

You read that right—AI slowed them down.

As someone who uses AI to write code daily, I found that really hard to believe. And yet, I can’t help asking myself, “Could this number be accurate? Have the benefits of AI been greatly exaggerated?”

There’s a lot to unpack from this study. So grab your lab coat and put on your scientist hat, because this week we'll dive into the research, hear what some of the developers in the study have to say about the findings, and, more importantly, talk about what we can learn from all of this.

Let’s jump in 🧑‍🔬

Takeaways

At the speed AI is moving, this post could be made irrelevant by the time you finish reading it, so let's talk about what we can learn from this study right away:

We tend to overestimate AI's usefulness. One thing that's clear from the study is that we can't trust our feelings when it comes to AI. Developers felt more productive when using AI, even though they took longer to complete tasks with it.

LLM over-use can do more harm than good. A common gotcha mentioned by developers (and observed by the researchers) was relying on AI for tasks where it didn't do a good job, and wasting time either trying to make it work or reverting the changes and starting over.

Downtime and distractions have a real cost. Waiting for the AI agent to finish generating code opens the door for distractions (typically unproductive distractions, like checking Instagram or X), which can be hard to come back from and take us out of the state of flow.

We'll return to discuss these later. Now, let's dive into the details of the research.

The Research

The study was conducted by a group of researchers at METR. It was a randomized controlled trial (RCT) designed to understand how AI tools affect the productivity of experienced open-source developers working on their own repositories.

They recruited 16 developers who maintain popular open-source repositories (including some very well-known ones, like jsdom and Hugging Face's transformers) and followed them as they completed 246 tasks, each randomly assigned to either allow or disallow AI.

When AI was allowed, developers were encouraged (but not required) to use AI tools like Cursor and ChatGPT at their discretion. When AI was disallowed... well, they had to rely on their own organic brains and good old Stack Overflow.

Before starting each task, developers had to estimate how long it would take them to complete it with and without AI. On average, they forecasted that AI would help them complete their tasks in 24% less time. After the study, they were a bit less optimistic, but they still estimated that AI had cut their completion time by 20%.

But looking at the actual time it took them to complete those tasks, the researchers found something interesting: when AI was allowed, it took developers 19% longer to complete their tasks—it slowed them down.
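To make the gap between perception and reality concrete, here is a small illustrative calculation (not taken from the paper). It applies the reported averages to a single hypothetical task that would take 60 minutes without AI. The 60-minute baseline is an assumption chosen only to produce round numbers, and applying averages to one task is a simplification:

```python
# Illustrative only: apply the study's reported average percentages to a
# hypothetical task. The 60-minute baseline is an assumption, not study data.
BASELINE_MIN = 60.0

forecast = BASELINE_MIN * (1 - 0.24)   # expected before the task: 24% less time
perceived = BASELINE_MIN * (1 - 0.20)  # believed after the study: 20% less time
actual = BASELINE_MIN * (1 + 0.19)     # measured with AI allowed: 19% more time

print(f"forecast:  {forecast:.1f} min")
print(f"perceived: {perceived:.1f} min")
print(f"actual:    {actual:.1f} min")
print(f"actual vs. perceived: {actual / perceived - 1:.0%} longer")
```

With these assumptions, developers would believe the task took about 48 minutes when it actually took about 71. That means the real time was roughly 49% longer than the perceived time.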

But... that can't be right, right?

Many other studies and benchmarks report productivity gains of 50% or more when using AI, and you can't open LinkedIn these days without reading at least 15 stories on how AI is making teams 10x faster.

If you're scratching your head about these results, you're definitely not alone. Here's Elizabeth Barnes, the founder and CEO of METR, on these conflicting signals:

“We're still confused about what is going on here and what the "true" capabilities are, given conflicting evidence from benchmark performance, anecdotes, other studies etc. But I do think we've learnt a few things.”

The research doesn't say that developers can never be faster with AI, though. In fact, 25% of the developers in the study saw an increase in productivity when AI was allowed, with some completing tasks in 38% less time.

So, what sets these developers apart?

Thankfully, the research wasn’t only based on “vibes.” Part of the evaluation involved manually reviewing and labelling 143 hours of screen recordings to decompose how developers were spending their time. These recordings, in combination with source-code statistics and user reports, help us better understand what could be causing the slowdown.

Why the Slowdown?

It would be great if we could point at one thing and say, "Ohh, there it is. That's the reason for the slowdown," but unfortunately, there isn't one. It's more likely a combination of factors.

The study mentions five likely factors that explain why most developers saw a decrease in productivity:

Over-optimism about AI usefulness. Developers weren’t required to use AI at all times, only when they felt it would help them do their jobs faster. But the fact that they felt more productive with AI may have led them to overuse it.

High developer familiarity with repositories. The research found that developers were slowed down more on tasks where they already had some exposure and needed fewer external resources. Since these developers were deeply familiar with these repositories (5 years of experience on average), this was more often than not the case.

Large and complex repositories. Developers noted that LLMs performed worse in large and complex environments. These repos were on average 10 years old and had over 1 million lines of code, so, as we might expect, the LLMs didn't perform as well as they would in a greenfield project.

Low AI reliability. Developers accepted less than 44% of Cursor generations, and when they did accept them, they spent significant time reviewing and touching them up. One developer noted that he “wasted at least an hour first trying to [solve a specific issue] with AI” before eventually reverting all code changes and just implementing it without AI assistance.

Implicit repository context. Developers relied on a lot of implicit knowledge about the codebase that the AI didn’t have access to, which was hard to provide as context via a prompt.

Quentin Anthony, one of the developers who participated in the study, shared his thoughts in an X thread, offering some additional explanations for the slowdown:

Solving a problem with AI can feel more enjoyable, even if it isn't actually more productive.

“I think cases of LLM-overuse can happen because it's easy to optimize for perceived enjoyment rather than time-to-solution while working.”

Knowing which tasks LLMs perform well on (and which they struggle with) is crucial.

“I know what parts of my work are amenable to LLMs (writing tests, understanding unfamiliar code, etc) and which are not (writing kernels, understanding communication synchronization semantics, etc). I only use LLMs when I know they can reliably handle the task.”

LLMs can get distracted by long and irrelevant context that can cause them to go around in circles (also known as “context rot”).

“You need to open a new chat often. This effect is worsened if users try to chase dopamine, because "the model is so close to getting it right!" means you don't want to open a new chat when you feel close.”

Long-running generations open the door for time-wasters that can be hard to come back from.

“It's super easy to get distracted in the downtime while LLMs are generating. The social media attention economy is brutal, and I think people spend 30 mins scrolling while "waiting" for their 30-second generation.”

On this last point, the research reveals a curious finding:

The screen recordings show that when AI was allowed, developers spent less time actively coding, reading, and researching (as one would expect), but more time in "idle/overhead." We don’t know what happened during this idle time, but given the developers’ testimony (and some first-hand experience), some of it could certainly be explained by "scrolling time while waiting for the 30-second generation."

Ruben Bloom, another of the study participants, shared similar thoughts:

“... I feel like historically a lot of my AI speed-up gains were eaten by the fact that while a prompt was running, I'd look at something else (FB, X, etc) and continue to do so for much longer than it took the prompt to run

I discovered two days ago that Cursor has (or now has) a feature you can enable to ring a bell when the prompt is done. I expect to reclaim a lot of the AI gains this way”

What can we learn from this?

Well, clearly, that we can't trust those reports from LinkedIn CTOs about how AI is making their teams 1,000% more productive.

But also, generalizing the research as “AI makes developers 19% slower” is the wrong lesson to take from the study.

First, because the study focuses on a specific type of development (maintaining large, mature open-source repositories with over a million lines of code), which isn’t representative of all the code being written out there. And second, because this field advances far too quickly for any single study to be more than a snapshot in time.

That said, there are some valuable lessons we can take with us that will remain true for the foreseeable future. Let's go back to our main takeaways.

1. We overestimate AI's usefulness

The fact that developers thought AI made them more productive, even when it slowed them down, says a lot about the way we feel about these tools.

As Quentin Anthony puts it in his X thread:

"We like to say that LLMs are tools, but treat them more like a magic bullet."

It’s hard not to treat them as magic bullets, though. Seeing an LLM one-shot a bug fix that would have taken us hours to debug is genuinely fascinating. But this is the same fascination that may lead us to overuse them.

This over-optimism about AI also means that surveying developers on how much more productive they think they are with AI may not be as revealing as we think it is.

2. Over-using LLMs can do more harm than good

LLMs are great at certain tasks and not-so-great at others. They can significantly speed up implementation in some cases, and spin their wheels going nowhere in others.

A big part of learning how to use these tools is developing an intuition for which tasks LLMs handle well and which ones we'd be better off doing manually (at least partially).

There's no instruction manual for developing this intuition. The only way to get better at it is to use these tools in your own workflows and experiment as much as possible, which, perhaps paradoxically, takes time and effort that chip away at our productivity gains.

3. Downtime and distractions have a real cost

Distractions have always been developers' number one enemy, but with AI, the enemy is growing stronger and hairier, and it’s charging at us at the speed of Brad Pitt in the F1 movie.

We now have a new category of "downtime" in our workflows as we wait for the AI to finish generating the code. This can be a good thing if we use that downtime productively... but we often don't.

To make matters worse, working with AI—particularly prompting and reviewing AI-generated code—doesn't put us in the same flow state that traditional coding produces, which makes it much easier to get distracted by something else.

As Matheus Lima puts it in The Hidden Cost of AI Coding:

“Instead of that deep immersion where I’d craft each function, I’m now more like a curator? I describe what I want, evaluate what the AI gives me, tweak the prompts, and iterate. It’s efficient, yes. Revolutionary, even. But something essential feels missing — that state of flow where time vanishes and you’re completely absorbed in creation.”

This type of downtime isn't much different from the classic “waiting for the build to finish” or “waiting for my tests to inevitably fail in CI,” but the more we rely on AI agents in our workflows, the more frequent it will become.

For those who are good at context-switching, the extra downtime can be a good opportunity to get a head start on a related task or catch up on email. For those of us who suck at context-switching, the best use of this time might be to just take a little break.

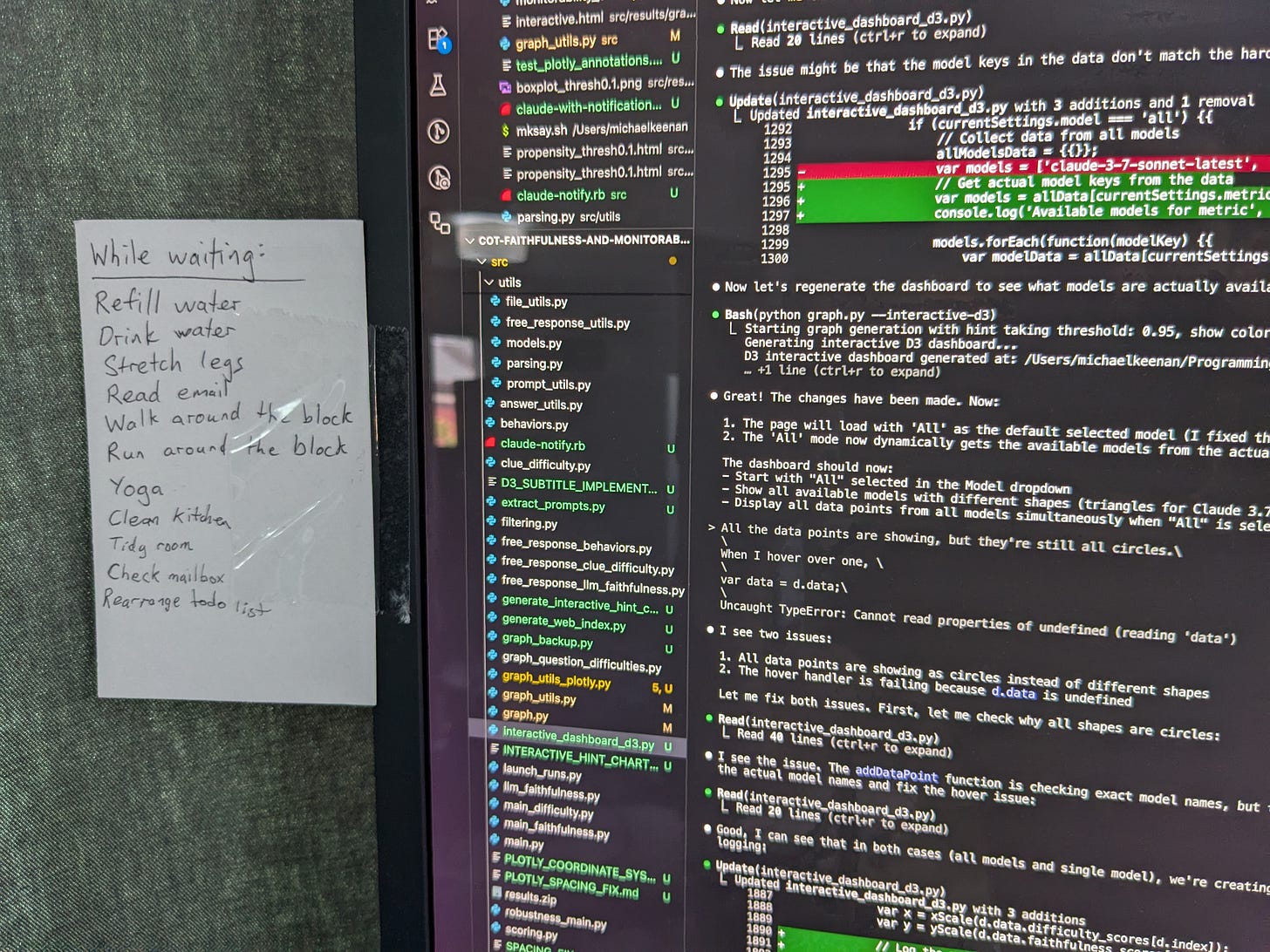

Keeping a list of “downtime activities” next to your monitor might be a good idea (a tip stolen from Michael Keenan).

You've reached the end of the article, which means that everything you read has been made irrelevant by the 520 new models that came out since you started reading it.

I'm only half-joking here. As with all things AI, the results of this research will become outdated as tools and LLMs continue to improve, and, particularly, as developers become more familiar with them.

But regardless of how fast technology advances, the underlying lesson of this study will continue to be true for a long time:

AI has an incredible amount of potential to make us more productive. But actually realizing that potential—and making the most of it—continues to be up to us.
